Battaglia P W, Hamrick J B, Bapst V, et al. Relational inductive biases, deep learning, and graph networks[J]. arXiv preprint arXiv:1806.01261, 2018.

1. Overview

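In this paper, the authors:

- argue that combinatorial generalization must be a top priority for AI to achieve human-like abilities

- advocate for integrative approaches that combine hand-engineered structure with end-to-end learning, instead of choosing between them

- explore how using relational inductive biases within deep learning architectures can facilitate learning about entities, relations, and the rules for composing them

- present a new building block, the graph network (GN), which has a strong relational inductive bias and provides a straightforward interface for manipulating structured knowledge and producing structured behaviors

- discuss how graph networks can support relational reasoning and combinatorial generalization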

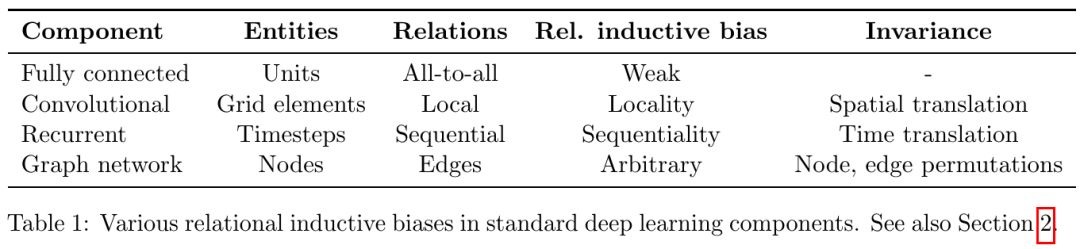

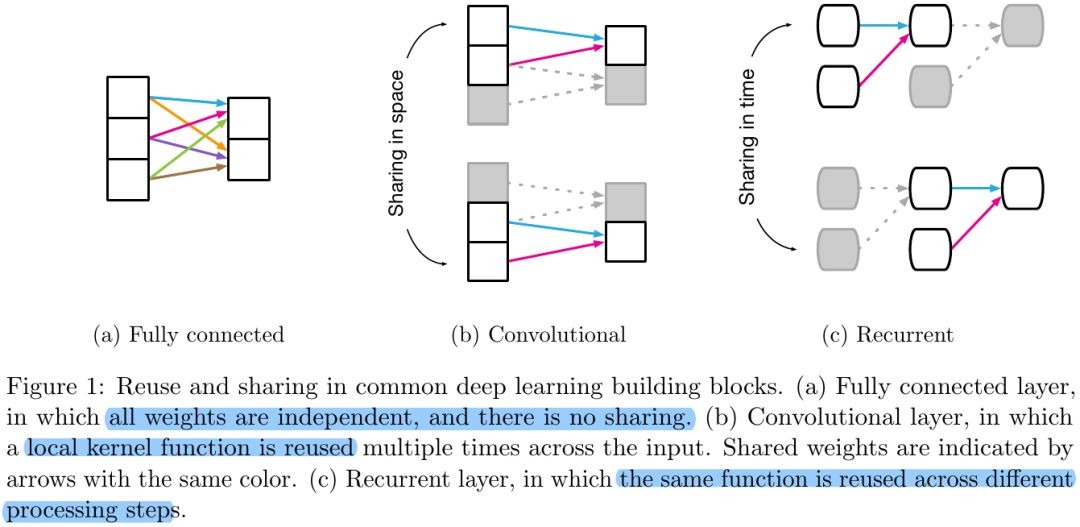

1.1. Relational Inductive Biases

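Relational inductive biases impose constraints on the relationships and interactions among entities in a learning process. For example, convolutional layers impose locality and translation invariance, recurrent layers impose sequentiality and time-translation invariance, and fully connected layers impose only a weak, all-to-all relational bias.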

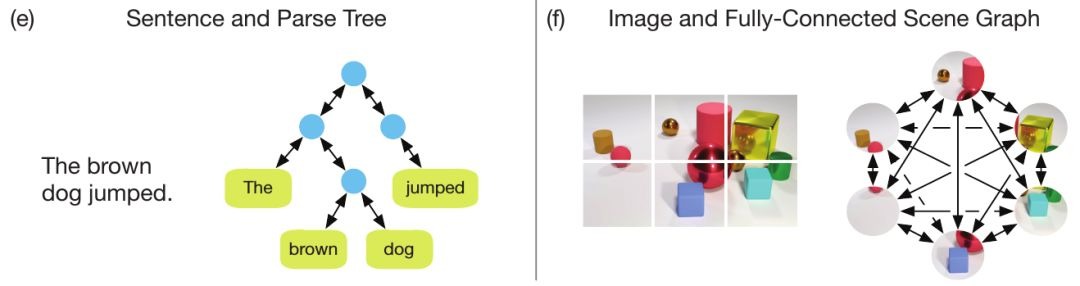

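1.1.1. Computations over Sets and Graphs

- Computations over sets (and graphs) should be invariant to the ordering of their elements; e.g., the center of mass of a solar system does not depend on the order in which the planets are listed.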
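A minimal sketch of the center-of-mass example, assuming 1-D positions and made-up masses (the names `center_of_mass` and `index_weighted` are hypothetical). The sum-based function gives one answer for every ordering of the bodies; the index-weighted counter-example does not.

```python
import itertools

Body = tuple[float, float]  # (mass, 1-D position); list order carries no meaning

def center_of_mass(bodies: list[Body]) -> float:
    # Sums are commutative, so every ordering of the bodies gives the same answer.
    total_mass = sum(m for m, _ in bodies)
    return sum(m * x for m, x in bodies) / total_mass

def index_weighted(bodies: list[Body]) -> float:
    # Counter-example: weights each body by its list position, so order matters.
    return sum(i * m * x for i, (m, x) in enumerate(bodies))

planets: list[Body] = [(2.0, 1.0), (1.0, 4.0), (1.0, -2.0)]
orderings = [list(p) for p in itertools.permutations(planets)]
print({center_of_mass(b) for b in orderings})       # {1.0}
print(len({index_weighted(b) for b in orderings}))  # 6
```

All six orderings collapse to a single center of mass, while the order-sensitive function yields six different values.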

1.2. Graph Networks

- takes a graph as input

- performs computations over the structure

- returns a graph as output

1.2.1. Strong Relational Inductive Biases

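- graphs can express arbitrary relationships among entities, so the input graph, not a fixed architecture, determines how representations interact

- graphs represent entities and their relations as sets, so GNs are invariant to permutations of nodes and edges

- per-edge and per-node functions are reused across all edges and nodes, respectively, so a single GN can operate on graphs of different sizes and shapes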
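The reuse of per-edge and per-node functions can be illustrated with a toy sketch. It assumes scalar node values and fixed, hypothetical `edge_fn`/`node_fn` (in a GN these would be learned). Because the same two functions are applied everywhere, they work unchanged on a 3-node triangle and a 5-node chain. Because incoming messages are summed, reversing the edge list does not change the result.

```python
# Hypothetical toy functions; in a GN these would be learned and shared.
def edge_fn(v_receiver: float, v_sender: float) -> float:
    return v_sender - v_receiver  # one "message" per edge

def node_fn(v: float, aggregated: float) -> float:
    return v + 0.5 * aggregated

def propagate(nodes: list[float], edges: list[tuple[int, int]]) -> list[float]:
    """One round of shared updates; each edge is a (receiver, sender) index pair."""
    incoming = [0.0] * len(nodes)
    for r, s in edges:
        incoming[r] += edge_fn(nodes[r], nodes[s])  # same edge_fn for every edge
    return [node_fn(v, m) for v, m in zip(nodes, incoming)]  # same node_fn for every node

triangle_nodes = [0.0, 2.0, 4.0]
triangle_edges = [(1, 0), (2, 1), (2, 0)]
chain_nodes = [0.0, 1.0, 2.0, 3.0, 4.0]
chain_edges = [(i + 1, i) for i in range(4)]

print(propagate(triangle_nodes, triangle_edges))        # [0.0, 1.0, 1.0]
print(propagate(triangle_nodes, triangle_edges[::-1]))  # [0.0, 1.0, 1.0]
print(propagate(chain_nodes, chain_edges))              # [0.0, 0.5, 1.5, 2.5, 3.5]
```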

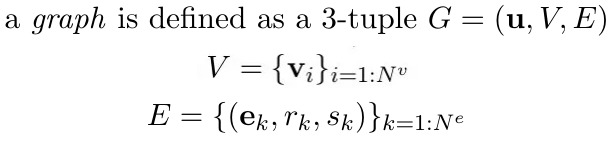

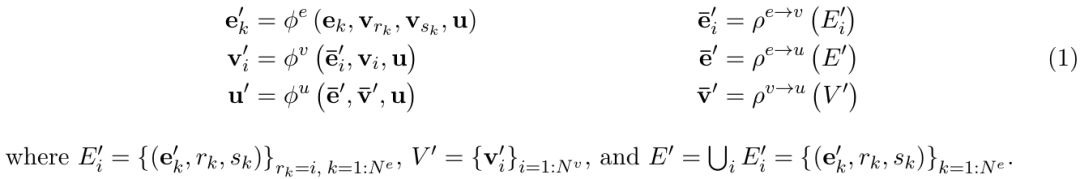

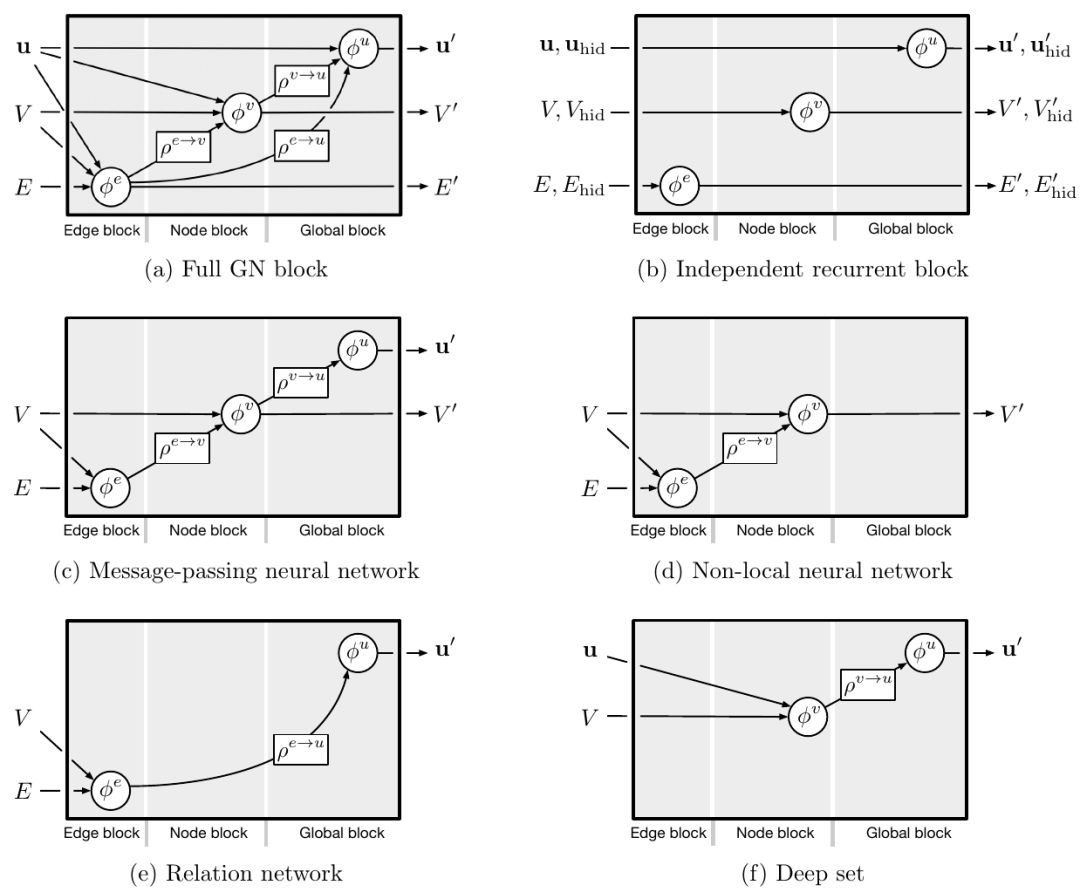

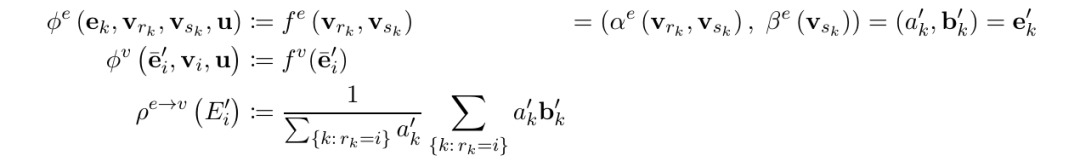

1.2.2. Definitions

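- G = (u, V, E): a graph, defined as a 3-tuple

- u: global attribute

- V = {v_i}_{i=1:N^v}: set of nodes (N^v is the number of nodes)

- E = {(e_k, r_k, s_k)}_{k=1:N^e}: set of edges (N^e is the number of edges)

- v_i: attribute of the ith node

- e_k: attribute of the kth edge

- r_k: index of the receiver node of the kth edge

- s_k: index of the sender node of the kth edge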
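For reference, a full GN block is defined in the paper by three update functions $\phi$ and three aggregation functions $\rho$. Edges are updated first, then nodes, then the global attribute:

$$
\begin{aligned}
e'_k &= \phi^e(e_k, v_{r_k}, v_{s_k}, u) & \bar{e}'_i &= \rho^{e\to v}(E'_i) \\
v'_i &= \phi^v(\bar{e}'_i, v_i, u) & \bar{e}' &= \rho^{e\to u}(E') \\
u' &= \phi^u(\bar{e}', \bar{v}', u) & \bar{v}' &= \rho^{v\to u}(V')
\end{aligned}
$$

Here $E'_i = \{(e'_k, r_k, s_k)\}_{r_k = i,\, k=1:N^e}$ are the updated edges received by node $i$, $V' = \{v'_i\}_{i=1:N^v}$, and $E' = \bigcup_i E'_i$. The $\rho$ functions must be invariant to permutations of their inputs and accept a variable number of arguments (e.g. elementwise sum, mean, or maximum).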

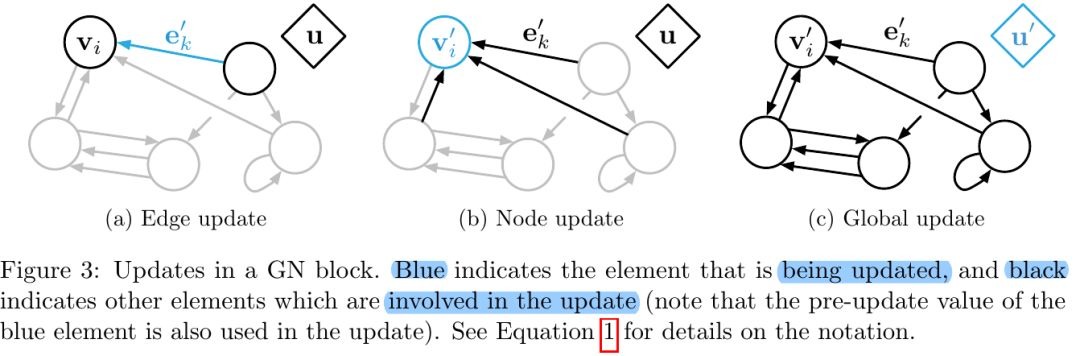
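A minimal pure-Python sketch of one full GN block follows. It uses the update order described in the paper: edges first, then nodes, then the global attribute. Several pieces are assumptions made for illustration. Attributes are plain lists of floats. Elementwise sum is used for every $\rho$. `Graph`, `gn_block`, `vsum`, and `concat_then_scale` are hypothetical names, and `concat_then_scale` is a toy stand-in for the learned functions (typically MLPs) a real GN would use.

```python
from dataclasses import dataclass
from typing import Callable

Vec = list[float]

@dataclass
class Graph:
    u: Vec                             # global attribute
    nodes: list[Vec]                   # node attributes v_i
    edges: list[tuple[Vec, int, int]]  # (e_k, r_k, s_k): attribute, receiver, sender

EdgeFn = Callable[[Vec, Vec, Vec, Vec], Vec]  # phi^e(e_k, v_rk, v_sk, u)
NodeFn = Callable[[Vec, Vec, Vec], Vec]       # phi^v(e_bar_i, v_i, u)
GlobalFn = Callable[[Vec, Vec, Vec], Vec]     # phi^u(e_bar, v_bar, u)

def vsum(vectors: list[Vec], dim: int) -> Vec:
    """Elementwise sum used for every rho; returns zeros of length dim if empty."""
    out = [0.0] * dim
    for vec in vectors:
        out = [a + b for a, b in zip(out, vec)]
    return out

def gn_block(g: Graph, phi_e: EdgeFn, phi_v: NodeFn, phi_u: GlobalFn) -> Graph:
    # 1. Update every edge with the same phi^e.
    new_edges = [(phi_e(e, g.nodes[r], g.nodes[s], g.u), r, s) for e, r, s in g.edges]
    edge_dim = len(new_edges[0][0]) if new_edges else 0
    # 2-3. For each node, aggregate its incoming updated edges, then apply phi^v.
    new_nodes: list[Vec] = []
    for i, v in enumerate(g.nodes):
        e_bar_i = vsum([e for e, r, _ in new_edges if r == i], edge_dim)
        new_nodes.append(phi_v(e_bar_i, v, g.u))
    node_dim = len(new_nodes[0]) if new_nodes else 0
    # 4-6. Aggregate all edges and all nodes, then update the global attribute.
    e_bar = vsum([e for e, _, _ in new_edges], edge_dim)
    v_bar = vsum(new_nodes, node_dim)
    return Graph(phi_u(e_bar, v_bar, g.u), new_nodes, new_edges)

def concat_then_scale(*parts: Vec) -> Vec:
    # Toy stand-in for a learned MLP: concatenate inputs, return half their sum.
    return [0.5 * sum(x for p in parts for x in p)]

g = Graph(u=[1.0], nodes=[[1.0], [2.0], [3.0]],
          edges=[([1.0], 1, 0), ([2.0], 2, 1)])
out = gn_block(g, concat_then_scale, concat_then_scale, concat_then_scale)
print(out.nodes, out.u)  # [[1.0], [2.75], [4.0]] [7.625]
```

Node 0 has no incoming edges, so its aggregated edge vector is all zeros. Reordering `g.edges` leaves `out.nodes` and `out.u` unchanged because every aggregation is a sum.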

1.3. Design Principles

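- flexible representations: node, edge, and global attributes can be vectors, tensors, sequences, sets, or even graphs, and the graph structure can be given explicitly or inferred

- configurable within-block structure: the update and aggregation functions of a GN block, and which inputs they receive, can be configured; message-passing neural networks, non-local neural networks, relation networks, and deep sets can all be expressed as GN variants

- composable multi-block architectures: because GN blocks map graphs to graphs, they can be stacked (with shared or unshared parameters) and arranged into encode-process-decode or recurrent architectures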
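Because every block maps a graph to a graph, blocks compose like ordinary functions. The sketch below wires an encode-process-decode pipeline in which one core block is applied repeatedly. Assumptions: a graph is a bare tuple of scalars, and `compose`, `encode`, `core`, and `decode` are hypothetical, non-learned toy blocks. Each extra core step lets a signal travel one more hop along the edges.

```python
from functools import reduce
from typing import Callable

# Hypothetical minimal graph: (global u, node values, edges as (receiver, sender) pairs).
Graph = tuple[float, list[float], list[tuple[int, int]]]
Block = Callable[[Graph], Graph]

def compose(*blocks: Block) -> Block:
    """Chain graph-to-graph blocks: each block's output graph feeds the next block."""
    return lambda g: reduce(lambda acc, block: block(acc), blocks, g)

def encode(g: Graph) -> Graph:
    # Toy encoder: transforms each node independently (ignores edges).
    u, nodes, edges = g
    return (u, [2.0 * v for v in nodes], edges)

def core(g: Graph) -> Graph:
    # Toy core: each node adds its senders' values; global = sum of node values.
    _, nodes, edges = g
    incoming = [0.0] * len(nodes)
    for r, s in edges:
        incoming[r] += nodes[s]
    new_nodes = [v + m for v, m in zip(nodes, incoming)]
    return (sum(new_nodes), new_nodes, edges)

def decode(g: Graph) -> Graph:
    # Toy decoder: mark nodes the signal has reached; global = how many were reached.
    _, nodes, edges = g
    reached = [1.0 if v > 0 else 0.0 for v in nodes]
    return (sum(reached), reached, edges)

def encode_process_decode(num_steps: int) -> Block:
    # The same core block is applied num_steps times (shared, recurrent processing).
    return compose(encode, *([core] * num_steps), decode)

chain: Graph = (0.0, [1.0, 0.0, 0.0, 0.0], [(1, 0), (2, 1), (3, 2)])
print([encode_process_decode(m)(chain)[0] for m in range(5)])  # [1.0, 2.0, 3.0, 4.0, 4.0]
```

On the 4-node chain, the number of reached nodes grows by one per core step until the whole graph is covered.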

1.4. Discussion

- combinatorial generalization: computations shared across entities and relations let a GN reason about never-before-seen systems

- open question: where do the graphs that graph networks operate over come from?